‘Platform Democracy’—a very different way to govern powerful tech

Facebook is trying it. Twitter, Google, OpenAI, and other companies should too.

Update: Since this has come up a few times:

Yes, the following also applies to AI companies and organizations that face thorny questions such as “What guardrails do we need in place before releasing an AI model?”, “What values should the system be aligned to?”, and “How should we trade off openness vs. safety in AI model development and release?”

Making this happen around AI is my primary focus right now (and was always an ultimate aim; see Governance of AI, with AI, through deliberative democracy for more on this). I should also re-emphasize that this is not a ‘tell-all account’; I received permission to share details about the Facebook/Meta and Twitter efforts.

This is meant to be a general audience overview of the proposal described in the paper shown below, and an update on adoption by Twitter and Meta.

Last winter (January 2022 onward), I helped Twitter come close to running a pilot of a democratic process for developing its policies, until Elon’s acquisition bid (unintentionally) killed it. I’ll share more on that project below.

I’m Aviv Ovadya, a technologist and researcher working to reimagine how we interact with technology; I’m affiliated with Harvard’s Berkman Klein Center and Cambridge University’s Center for the Future of Intelligence.¹ My goal in this newsletter is to share useful ideas and actionable hope at the messy intersection of technology and society: positive visions of a world where technology is ‘good for people’ and concrete actions that may help take us there.

In this piece, I explore one direction that I believe is both crucial and hopeful, along with some exciting news about recent progress: one successful platform adoption and one thwarted one.

Problem: Platforms are powerful, unaccountable, and stuck

For the last six years, I've spent much of my time trying to get platforms like Facebook and YouTube to address challenges like misinformation and polarization (arguably fueled by the objectives their AI recommendation systems optimize for). I’ve also kept running up against the same blockers when trying to make change happen. They aren’t what many people expect. Often the biggest blocker has nothing directly to do with $$$, advertisers, or even eyeballs;² it's about fear.

Platforms are scared (for good reason) that influential stakeholders will make their lives very difficult if they make changes that hurt those stakeholders or their constituents. These stakeholders include factions in political battles all over the world: factions that run the governments where the platforms operate, and whose chances of winning those battles can be affected by platform decisions. Platform stakeholders also include powerful, important, and often conflicting civil rights organizations like the American Civil Liberties Union (ACLU), which fights for free speech, and the Anti-Defamation League (ADL), which fights against hate speech.

Problems like misinformation, polarization, and hate speech are just hard, and there are no 100% clear answers, so there is essentially nothing a platform can decide that will gain the broad trust of powerful stakeholders or the general public. Even if Google or Meta were nonprofits, it would be hard for people to trust their actions on such important issues. This leaves the platforms stuck: the best thing for them to do (for their self-preservation) is often to take the minimal possible action.

That’s the platform perspective. That sucks for them. But it also sucks for us.

Platforms impact billions with their decisions. Right now, those decisions are primarily in the hands of corporations and unaccountable CEOs, and they are heavily influenced by pressure from partisan politicians and authoritarian states aiming to entrench their power. Better answers to the question “Who decides?” about platform actions are crucial, because platforms have power over what speech is allowed, how it flows, and more, and control over these things has huge impacts on the viability of democracies and economies.

So who should decide what platforms do about issues like polarization, hate speech, and misinformation? The ACLU or the ADL? Republicans or Democrats? CEOs or authoritarian dictators?

I would love it if we could just chuck it all and decentralize everything instead, but it turns out that doesn’t actually help solve most problems. Instead, I’ve spent much of the last few years trying to find the holy grail—a way to get platforms unstuck in a way that benefits all of us. It turns out there is something that might just do the job!

To understand that path, let’s first take a moment to imagine a world where democracy might be working. (This world!)

Context: A reinvention of democracy

Did you know that the European Union convened 800 people, chosen by lottery to be representative from across EU countries, to help decide the future of Europe? The representatives matched the makeup of the EU as a whole (via sortition) and didn’t just spout off their gut opinions, or check boxes in a survey. They spent their weekends deliberating together with facilitation support, learning from experts and each other and drafting and proposing recommendations. People came from 27 countries, speaking 24 languages, with simultaneous translation, compensation for time, etc.—and their work may shape the future of a continent.

This new kind of one-off representative deliberative process worked so well that the EU is going to institutionalize it, making such democratic bodies a core component of EU decision-making. Similar processes, combining democratic lotteries with deliberation, have succeeded around the world on complex issues ranging from climate change in France to residential zoning in Australia.

These processes are often called citizen assemblies, citizen panels, or citizen juries. A video from The Economist explains why they matter, and these incredible resources by Claudia Chwalisz’s team at the OECD go into the weedy details. In the current age of low trust, strong democratic mandates are hard to come by, and these approaches may provide them.
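To make the idea of being “chosen by lottery to be representative” a little more concrete, here is a minimal Python sketch of stratified random selection. It is a simplified illustration under stated assumptions, not the method any particular assembly used: it balances a single attribute (a hypothetical region field) and assumes a pool of volunteers who have already answered an invitation. Real organizers typically balance several attributes at once (e.g. age, gender, and region) and may use more sophisticated selection algorithms. The function names `proportional_quotas` and `stratified_lottery` are hypothetical. The core logic is to set seat quotas in proportion to the population and then draw uniformly at random within each group.

```python
import random


def proportional_quotas(shares, panel_size):
    """Split panel_size seats across groups in proportion to population shares.

    Uses largest-remainder rounding so the seats always sum to panel_size.
    Assumes shares are non-negative and sum to 1.
    """
    exact = {group: share * panel_size for group, share in shares.items()}
    seats = {group: int(x) for group, x in exact.items()}  # round down first
    leftover = panel_size - sum(seats.values())
    # Hand the remaining seats to the groups with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda g: exact[g] - seats[g], reverse=True)
    for group in by_remainder[:leftover]:
        seats[group] += 1
    return seats


def stratified_lottery(pool, quotas, seed=None):
    """Randomly draw a panel that meets per-group seat quotas.

    pool   -- list of (person_id, group) pairs for eligible volunteers
    quotas -- dict mapping group -> number of seats to fill
    """
    rng = random.Random(seed)  # seedable so a draw can be reproduced and audited
    volunteers = {}
    for person_id, group in pool:
        volunteers.setdefault(group, []).append(person_id)

    panel = []
    for group, seats in quotas.items():
        candidates = volunteers.get(group, [])
        if len(candidates) < seats:
            raise ValueError(f"not enough volunteers in group {group!r}")
        # Uniform draw within the group: each volunteer in it has an equal chance.
        panel.extend(rng.sample(candidates, seats))
    return panel
```

For example, if three regions hold 45%, 35%, and 20% of the population and the panel has 7 seats, `proportional_quotas` allots 3, 3, and 1 seats. `stratified_lottery` then draws that many people at random from each region's volunteers. Seeding the random generator makes it possible to replay the draw, which is one simple way to let outsiders check that the lottery was fair.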

A path forward: ‘Platform Democracy’

But what does this have to do with technology and governance? It offers a viable alternative to a broken status quo. What if platform decisions about speech and censorship, algorithms and policies, were made not by CEOs but by the people they impact? I call this general concept platform democracy: an ideal to strive for.

Platform democracy: governance of the people, by the people, for the people—except within the context of a digital platform instead of a physical nation.

When I put it that way, it perhaps sounds crazy, or at least naive. But to be clear, I don’t (currently) advocate for the impractical, e.g., getting rid of tech company leadership and replacing it with “platform democracy.” What I believe is achievable is using democratic processes that have already been validated around the world to move us significantly closer to that ideal: gradually shifting power over collective decisions into the hands of the people those decisions impact. Devolution of power can be both incremental and revolutionary at the same time.

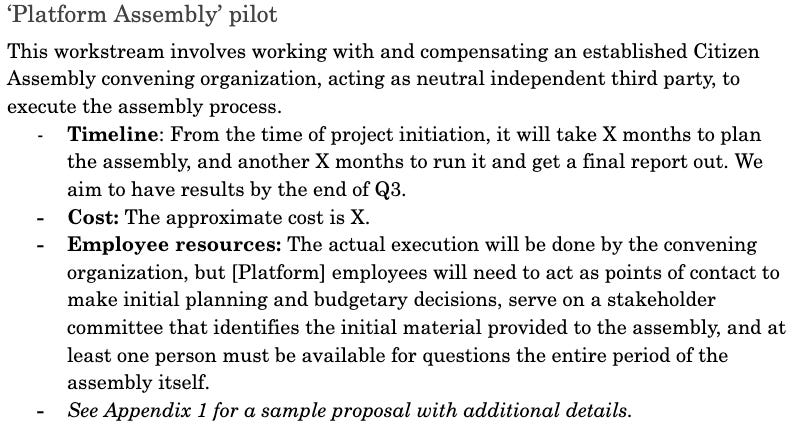

One specific approach for implementing aspects of platform democracy is the platform assembly—this is analogous to the EU process described earlier.

A platform assembly can be thought of as a sort of “on-demand legislature for platform decision-making, based on the citizen assembly model.”

The image below provides an overview of how it could work:

Why would Facebook or YouTube or Twitter do this? Because platform democracy gets them unstuck! If they can honestly say that a decision was made by a democratic process, it becomes much harder for stakeholders to attack that decision, and it can build public trust. Yes, it means they give up some power, but for many decisions, this is still a very good trade.

You might also have a bunch of reservations about the convening approach the EU used, from “Who has time for this sort of thing?” to “You think people chosen at random will make good decisions?” to “How is this even democracy?” All of these questions have fairly decent answers, but getting into all of the gory details would take a book. However, a paper I wrote on platform democracy as a fellow at the Harvard Kennedy School provides far more detail and references. (If you prefer podcasts, I go into all of the standard questions here with Mike Masnick.)

Conversations with platforms

For the past several years I’ve been in talks with platforms to get such approaches adopted, at least as a pilot, and for the past year or so I’ve also been working directly with the community of organizations that implement these processes for governments. It is frankly incredible what these organizations have been doing—essentially reinventing democracy for the modern era and getting powerful governments around the world on board.

But my goal wasn’t to get a government on board. It was to matchmake a platform facing a controversial decision where it felt stuck (e.g., “What do I do about political ads?”) with an organization that can run a democratic process that not only gets it unstuck, but does so by putting at least some of the power in the hands of the people.

Platform adoption

Almost everyone told me this was crazy, but within a year, two platforms got on board. (Well, two that I can talk about right now.) I teased the Twitter story earlier, so let me elaborate on that first, now that you finally have the context. As far as I know, this is the first public mention of Twitter’s (former) platform democracy ambition.

The Twitter tragedy

Last winter I started making headway advocating for such a process at Twitter, and after many months of back-and-forth, I was able to connect them with a citizen assembly convener, newDemocracy. Here’s an excerpt from the explanation I provided to Twitter, with a few details removed.

One of the potential questions that the platform assembly would have helped Twitter answer was:

What should we do about potential misinformation that is flagged by 3rd party checkers, given our need to balance free expression with our other values and responsibilities?

The plan that was decided on in March was to run the pilot assembly such that it would complete its work by the end of September, in time to significantly shape the roadmap for the following year. The team in charge of this secured the necessary approvals and resources, got buy-in from cross-functional teams, etc. I was told that just about everyone who was briefed on this was enthusiastic about it. The hope was that if a small-scale regional pilot assembly worked well, that could start the wheels moving toward global assemblies tackling many of Twitter’s thorniest problems.

Unfortunately, in April Elon Musk made his takeover bid for Twitter, and that put everything on indefinite hold. Soon after Musk arrived, everyone involved was laid off, and I got permission to share this story. It currently seems unlikely that Elon’s Twitter will continue with this vision. But Elon, if you are reading this, feel free to reach out and this can pick up where it left off! More generally, I’m always happy to hear from people interested in making this happen at their organization.

The Facebook pilots

While the effort with Twitter fell flat, Meta/Facebook was exploring a similar path—and it kept going. Facebook has now run two pilots across five countries, asking participants to deliberate on the following question:

“What to do about problematic climate information on the Facebook platform?”

These pilots were not quite what I would call a “true platform assembly” (unlike what Twitter was planning to run), but they are very exciting nonetheless. They are the first example I know of in which a global platform ran a representative, informative, and impactful democratic process (representative within the countries where it operated). These pilots are also a stark contrast to Facebook’s useless referendum experiment in 2009. You can read more about them at Casey Newton's Platformer (and as an observer, I hope to share more on them in future issues).

The pilots went so well that the only question was what Meta/Facebook would do next. I had pushed for something closer to a truly global platform assembly of 300 to 500 people (providing full agency and much more time to assembly members), but that was not to be, at least not yet. Instead, Facebook decided to go big. Very big.

Meta’s Mammoth “Community Forum”

This newsletter is being delivered to your inbox shortly after Meta announces its grand plan for scaling up these pilots. Meta will soon run a giant global deliberative process based on the Deliberative Polling approach, which it calls a “Community Forum.” It will involve almost 6,000 people, representative across 32 countries, making decisions that will impact the future of the company’s biggest bet: the metaverse.

To me, this is incredibly exciting. Yes, I do have significant reservations about the specific approaches (which I look forward to sharing). I have been embedded in the process of setting this up and there is a lot to say—the devil of democracy is in the details. I am cautiously hopeful that this is just a first stab at the (extraordinary) operational challenges of meaningful and inclusive global platform governance.

I'll leave most of that for future pieces, both here and in other channels. For now, I think it's worth just appreciating how revolutionary this is. Nothing quite like it has ever been done before to help set policy for a company. It's not leadership, shareholders, employees, or elites who are being asked for their input. It's everyday people, matching the makeup of 32 countries, across every region of the world, supported with context, and compensated for their time.

I'm looking forward to seeing what they decide.

Learn more in my Harvard Belfer Center paper on platform democracy or in the podcast.

Future issues will also cover other topics I’ve worked on over the past decade including algorithms that bridge divides, complementary approaches to platform democracy (e.g. digital tools like Polis and Remesh), and especially ensuring that generative AI has a positive impact on society.

Share this with people who might find it interesting—and tag me if you share on social media: I’m @metaviv, aviv@mastodon.online, and Aviv Ovadya on LinkedIn.

Stay in touch by following me on any of those platforms, reaching out at aviv@aviv.me, and of course, subscribing.

Thanks to far more people and communities than I can list here for their time and insights that have enabled this work, including Iain Walker, Kyle Redman, Rahmin Sarabi, Luke Thorburn, Yago Bermejo, Linn Davis, Peter MacLeod, Adam Cronkite, Claudia Chwalisz, Ieva Cesnulaityte, Helene Landemore, James Fishkin, Alice Siu, Antoine Vergne, Shahar Avin, David Krueger, Stephen Larrick, Leisel Bogan, Afsaneh Rigot, Amritha Jayanti, Nathan Schneider, Bee Cavello, Joe Edelman, Colin Megill, Andrew Konya, Robyn Caplan, Eli Pariser, and the many employees I can’t name fighting for democracy across the tech industry.

¹ More precisely, I’m a visiting scholar at the University of Cambridge’s LCFI (though primarily based in the Boston area) and an affiliate at Harvard’s Berkman Klein Center for Internet & Society. I also consult for civil society organizations, aligned technology companies, and funders.

² Profit-seeking can obviously still be a significant obstacle to platform change, but when it comes to issues like harassment, misinformation, and polarization, it often ends up playing a smaller role. The other dominant obstacles include operational factors, external limitations, and meaning, some of which I hope to cover in a later issue.
